Is good data-driven decision-making possible?

Tuesday, October 7, 2008 at 08:44PM

Is data-driven decision-making possible in schools? I've long worried about it and am glad to hear Chris Lehmann echo this concern in his excellent presentation (excellent because I agree with almost everything he says?) at Ignite Philly.

Chris, you're singing the librarians' old songs about research and problem-based learning and presentation and authenticity. Great!

But back to data-driven decisions. Why are these nearly impossible to make well at a school level? From an earlier column, A Trick Question:

At last spring’s interviews for our new high school library media specialist, the stumper question was:

"How will you demonstrate that the library media program is having a positive impact on student achievement in the school?"

How did that nasty little question get in there with “Tell us a little about yourself” and “Describe a successful lesson you’ve taught”? Now those questions most of us could answer with one frontal lobe tied behind our cerebellums.

Given the increased emphasis on accountability and data-driven practices, it’s a question all of us, librarians and technologists alike, need to be ready to answer, even if we are not looking for a new job or don’t want to be in the position of needing to look for one.

While I would never be quick enough to have said this without knowing the question was coming, I believe the best response to the question would be another question: “How does your school measure student achievement now?”

If the answer were simply, “Our school measures student achievement by standardized or state test scores,” I would then reply, “There is an empirical way of determining whether the library program is having an impact on such scores, but I don’t think you’d really want to run such a study. Here’s why:

- Are you willing to have a significant portion of your students (and teachers) go without library services and resources as part of a control group?

- Are you willing to wait 3-4 years for reliable longitudinal data?

- Are you willing to measure only those students who are here their entire educational careers?

- Are you willing to change nothing else in the school to eliminate all other factors that might influence test scores?

- Will the groups we analyze be large enough for the results to be statistically significant?

- Are you willing to provide the statistical and research expertise needed to make the study valid?"

If test scores are the sole measure of "student achievement," there are indeed some things we in schools can be excellent at doing with data. We can identify individual students who perform below established norms, and we can look at groups of students with certain characteristics (ELL, FRP, SpEd) and see how they compare with the norms. We can also track trends for those groups over time.

We are good at determining which groups need help. But what comes next is the "gotcha."

Schools are unwilling and unequipped to do controlled studies on the effectiveness of any single intervention over a period of time to improve the test scores of a school or group. The typical pattern is to throw as many changes into a curriculum as possible and hope something sticks.

Let's say our SpEd population is showing low reading scores. A building may well decide to:

- Increase the use of differentiated instruction

- Try a new computerized reading program

- Increase the SSR program

If reading scores then go up, which of these changes made the difference? There is no way to tell.

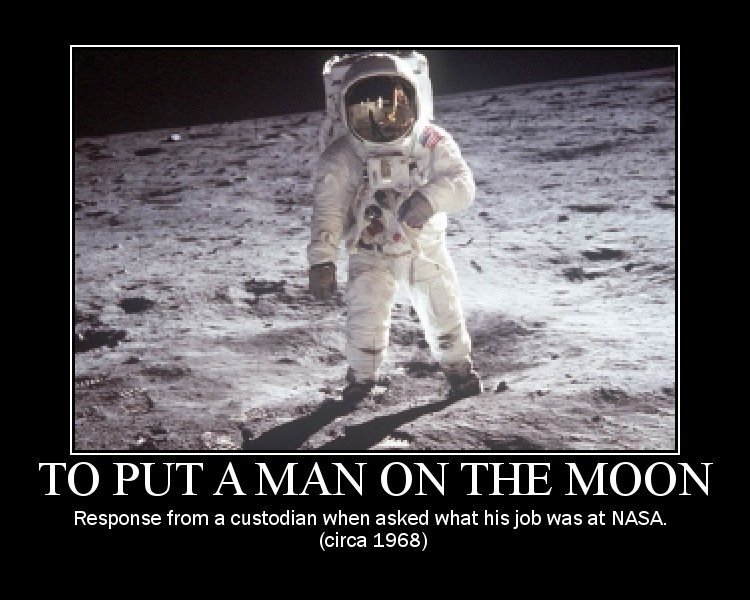

Schools should not be tasked with doing research. This was what university lab schools are (were) for. Every school doing its own research on effective educational practices makes no more sense than every hospital being a research hospital and every student being a guinea pig.

I am not sure what the answer to this problem might be, nor has anyone yet given me a good solution (if they are even willing to admit a problem exists). I do believe that carefully applied, valid research can help teachers improve their instructional practices.

It just shouldn't be up to the practitioner to also be a researcher.

Your thoughts?

Leading good